In a first in the US, a driver has been charged with vehicular manslaughter after his Tesla killed two people while Autopilot was activated.

The Washington Post reports that Kevin George Aziz Riad, 27, was behind the wheel of a Tesla Model S travelling at high speed on December 29, 2019, when the vehicle left a freeway and ran a red light in the Los Angeles suburb of Gardena.

It collided with a Honda Civic occupied by Gilberto Alcazar Lopez and Maria Guadalupe Nieves-Lopez. They died at the scene.

Riad and his passenger were hospitalised with non-life-threatening injuries. He has since pleaded not guilty and is free on bail.

A preliminary hearing will take place on February 23.

While the criminal charging documents don’t explicitly mention Autopilot, investigators from the National Highway Traffic Safety Administration (NHTSA) confirmed last week that Autopilot was in use at the time of the crash.

The victims’ families are also pursuing civil action. They are suing Riad for negligence, citing numerous driving infringements on his record, and suing Tesla for selling vehicles that can accelerate suddenly and lack an effective autonomous emergency braking system.

A joint trial is scheduled for mid-2023.

The Washington Post reports this is the first time a person in the US has been charged with a felony over a fatal crash in which they were using a partially automated driving system.

The driver of an Uber autonomous test vehicle, Rafaela Vasquez, was charged in 2020 with negligent homicide after her vehicle fatally struck a pedestrian in Tempe, Arizona, in 2018. Prosecutors haven’t filed charges against Uber, and Vasquez has pleaded not guilty.

Both the NHTSA and the National Transportation Safety Board (NTSB) are currently investigating the alleged misuse of Autopilot.

The NHTSA has reportedly sent investigation teams to 26 crashes involving Autopilot since 2016, which have resulted in at least 11 deaths.

The NTSB found that a non-fatal 2018 crash in Culver City, California, was likely the result of the driver’s inattention and overreliance on Autopilot.

In this incident, the Tesla’s systems detected the driver’s hands on the wheel for just 78 seconds of the 29 minutes during which Autopilot was engaged. The vehicle collided with a stationary fire truck that had its lights on; the driver survived.

Even in that case, however, the NTSB criticised Tesla for designing a system that “permitted the driver to disengage from the driving task”.

In another 2018 collision, the NTSB found that the driver of a Model X on Autopilot was likely distracted by a mobile phone game when his vehicle struck a damaged highway barrier that the relevant authorities had not reported or repaired.

However, it also said the “Tesla vehicle’s ineffective monitoring of driver engagement was determined to have contributed to the crash”.

After this finding, the NTSB recommended in 2020 that the NHTSA “evaluate Tesla Autopilot-equipped vehicles to determine if the system’s operating limitations, the foreseeability of driver misuse, and the ability to operate the vehicles outside the intended operational design domain pose an unreasonable risk to safety; if safety defects are identified, use applicable enforcement authority to ensure that Tesla Inc. takes corrective action.”

It also called on the NHTSA to develop a method of verifying that manufacturers of vehicles with Level 2 autonomous driving technology employ safeguards limiting its use to the conditions for which it was designed, and to develop standards for driver monitoring systems.

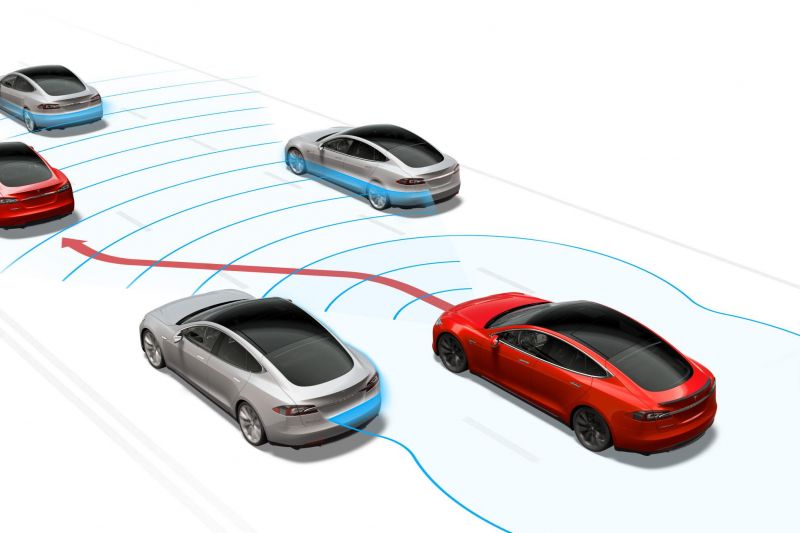

Level 2 autonomous driving, according to the Society of Automotive Engineers, means the vehicle can control both steering and braking/accelerating simultaneously under some circumstances, but the human driver must continue to pay full attention.

An estimated 765,000 Tesla vehicles in the US are equipped with Autopilot.

While Tesla is hardly unique in offering Level 2 technology, it has been criticised for the system’s name, with a German court ruling that the company can’t use the term Autopilot to describe its technology.

Despite disclaimers from Tesla, the technology has also been misused by irresponsible drivers. For example, a California man was arrested in May 2021 for sitting in the back seat as his Tesla drove down a freeway.

Tesla CEO Elon Musk has even conceded that his company’s Full Self-Driving suite, another Level 2 feature, is “not great”, though he promised it is improving with each over-the-air update.

When the company rolled out the software to a select group of beta testers, it warned “it may do the wrong thing at the worst time”.

The so-called Full Self-Driving feature builds on Autopilot by allowing the vehicle to exit a highway, drive around obstacles, and make left or right turns according to the satellite navigation route.

Tesla has also been criticised for adding an Assertive drive mode to Full Self-Driving (FSD), which allows the vehicle to stay in the overtaking lane and roll through stop signs.