US regulators are investigating over a dozen crashes involving Teslas in Autopilot mode striking emergency vehicles, and one outlet has obtained dashcam and police body camera footage, plus partial data logs, from one of them.

The Wall Street Journal published footage from a February 27, 2021 crash involving a 2019 Model X, which struck parked emergency vehicles on a highway in Montgomery County, Texas at a speed of 87km/h.

Police vehicles were parked in a lane on the highway performing a traffic stop on another driver, and the crash resulted in injuries to five officers and the hospitalisation of the other driver.

The Tesla detected a vehicle in its path just 2.5 seconds and 34m before the crash, though it had earlier been able to detect moving non-emergency vehicles at a much greater distance.

While that was a slow response time, the system can’t be entirely to blame as the driver was allegedly under the influence of alcohol.

He also allegedly ignored a total of 150 alerts from the Autopilot system during the 45-minute drive leading up to the crash, an average of one every 18 seconds.

These alerts pertained to the driver taking his hands off the wheel, and the first alert chimed less than two minutes after the drive commenced.

The five injured officers are suing Tesla, claiming the Autopilot feature was responsible for the accident; Tesla, in turn, is arguing the fault lies with the driver.

Since this vehicle was produced, Tesla has introduced internal cameras designed to detect inattentiveness, while the company has also rolled out an update designed to improve Autopilot’s ability to detect emergency vehicles.

The US National Highway Traffic Safety Administration (NHTSA) is investigating 16 crashes between Teslas and emergency vehicles, one of which occurred after the aforementioned update.

The Wall Street Journal says it obtained eight crash reports included in the NHTSA investigation, and in at least six of them the incident occurred while emergency vehicle lights were flashing.

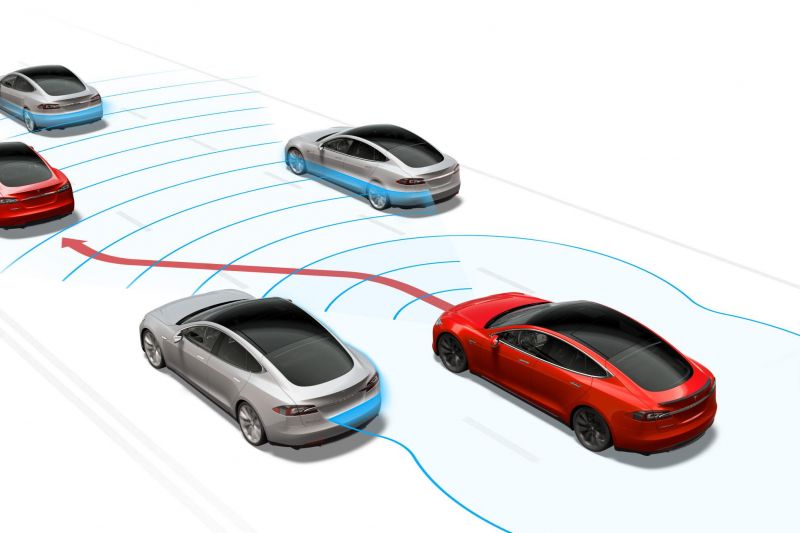

Tesla says its Autopilot system, which is a Level 2 autonomous driving feature, can handle steering and even execute lane change manoeuvres, but requires drivers to pay attention.

Company CEO Elon Musk confirmed earlier this year he’s happy to license the Autopilot technology, or that of the so-called Full Self-Driving system, to other car companies.

The company has removed ultrasonic sensors and radar from its vehicles in favour of a camera-only set-up it calls Tesla Vision, which powers the two Level 2 autonomous driving features.

In addition to the aforementioned investigation, NHTSA is investigating 22 deaths from crashes involving Teslas suspected to have been using Autopilot.

The Autopilot and Full Self-Driving (FSD) systems have endured a barrage of criticism, as well as legal action and regulatory scrutiny, regarding their effectiveness.

Just this year:

- German newspaper Handelsblatt received a massive data dump with thousands of customer complaints about Autopilot from between 2015 and early 2022

- The Washington Post published a story saying Mr Musk had removed radar to cut costs, resulting in an uptick in “phantom braking” reports

- A recall was issued in the US for 362,758 vehicles with FSD Beta as they could “infringe upon local traffic laws”

- The head of Tesla’s Autopilot program testified a 2016 video demonstrating Autopilot was staged

Shareholders also recently filed a proposed class action suit against Tesla in federal court in San Francisco, arguing they had been defrauded by the company with false and misleading statements on technology that “created a serious risk of accident and injury”.

Tesla is also reportedly the subject of a US Department of Justice probe examining whether the company misled consumers, investors, and regulators by making unsupported claims about the capability of its driver assist technology.

In one reprieve for Tesla, a California jury found in the company’s favour earlier this year in a case involving its Autopilot system.